This example uses Docker Compose to run an nginx reverse proxy with SSL that exposes different WordPress web apps on multiple domains. The setup is easy to generalize to serve multiple websites running other kinds of web applications.

Prerequisites

Before starting, you need to check you satisfy the following prerequisites:

- You have sudo privileges on a remote Linux server accessible via ssh. Both docker and docker-compose must be available on the server.

- You own two domains (or subdomains), and you can configure their DNS records to point to your remote server.

As an example, in my case, I have a VPS with Ubuntu Linux, where I installed docker and docker-compose. Then, I checked I can change the A records of two domains that I own.

For more information about setting up the prerequisites, you should check the following articles: Secure root set up for a Linux Server, How to install and use Docker, How to install Docker Compose.

System Design: docker reverse proxy multisite

To set up an Nginx reverse proxy with SSL for exposing web applications on different domains we need to build a system made of two parts:

- a Reverse proxy to accept requests on multiple domains and establish SSL communications

- one or more Web applications to expose. In this example, we use WordPress+Database. (Note that this setup can be generalized by using different web applications.)

In this article, each of the above components is implemented using a docker-compose file.

Components

Here is a list of components we need to work with.

- DNS records: we need to point A records to our remote server

- docker network: internal network used by docker containers for communication

- nginx-proxy: fast reverse proxy to route server traffic into the docker network

- letsencrypt companion for reverse proxy: provides https security layer to containers

- multiple WordPress services, each one made of a backend server and a database. (this can be generalized with different web applications)

DNS records

For each domain name, you need to add an A record for that domain/subdomain, and point it to the IP address of your server/VPS.
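Before moving on, it can help to confirm that the A record has propagated. The sketch below assumes dig is installed (on Ubuntu it usually comes with the dnsutils package). The function names, example.com, and the address 203.0.113.10 are placeholders and not values from this setup.

```shell
# Look up the A records of a domain (assumes dig is available).
resolve_a() {
  dig +short "$1" A
}

# Succeed only if one of the domain's A records equals the expected IP.
# Usage: a_record_points_to <domain> <server-ip>
a_record_points_to() {
  resolve_a "$1" | grep -Fxq "$2"
}

# Example (placeholder values):
# a_record_points_to example.com 203.0.113.10 && echo "DNS ok" || echo "DNS not ready"
```

DNS changes can take a while to become visible, so a failing check right after editing the record does not necessarily mean the record is wrong.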

Docker network

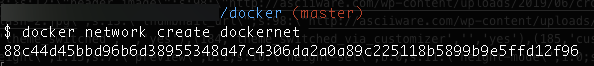

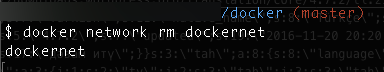

Access the server via ssh, and create a docker network named dockernet that we’ll use to enable communication between containers.

docker network create dockernet
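Running the create command a second time fails because the network already exists. A minimal sketch of an idempotent variant follows; ensure_network is a hypothetical helper name, not part of docker.

```shell
# Create the network only if it does not exist yet.
# Usage: ensure_network <network-name>
ensure_network() {
  docker network inspect "$1" >/dev/null 2>&1 || docker network create "$1"
}

# Example: ensure_network dockernet
```

This makes the step safe to repeat, for instance when the setup is scripted.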

Nginx reverse https proxy with docker

The proxy and companion containers enable routing and establishing SSL connections.

The NGINX reverse proxy listens on host ports 80 and 443 and routes traffic to containers created with the env variable VIRTUAL_HOST=some.domain.tld.

The Let's Encrypt proxy companion obtains SSL certificates. Containers needing https just need to specify the variables LETSENCRYPT_HOST and LETSENCRYPT_EMAIL.

Add a directory that will contain all your resources, then create and edit a docker-compose.yml file in the folder.

mkdir ~/proxy && cd $_

nano docker-compose.yml

The code below defines the proxy and the companion. Copy it into the yml file.

version: '3'

services:

  # reverse proxy, see: https://github.com/jwilder/nginx-proxy
  # see: https://github.com/buchdag/letsencrypt-nginx-proxy-companion-compose/blob/master/2-containers/compose-v3/environment/docker-compose.yaml
  nginx-proxy:
    image: jwilder/nginx-proxy
    container_name: nginx-proxy
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./data/conf:/etc/nginx/conf.d
      - ./data/vhost:/etc/nginx/vhost.d
      - ./data/html:/usr/share/nginx/html
      - ./data/dhparam:/etc/nginx/dhparam
      - ./data/certs:/etc/nginx/certs:ro
      - /var/run/docker.sock:/tmp/docker.sock:ro
    restart: always

  # proxy companion to provide and renew SSL certificates
  # see: https://github.com/JrCs/docker-letsencrypt-nginx-proxy-companion
  letsencrypt:
    image: jrcs/letsencrypt-nginx-proxy-companion
    container_name: nginx-proxy-le
    depends_on:
      - nginx-proxy
    volumes:
      - ./data/vhost:/etc/nginx/vhost.d
      - ./data/html:/usr/share/nginx/html
      - ./data/dhparam:/etc/nginx/dhparam:ro
      - ./data/certs:/etc/nginx/certs
      - /var/run/docker.sock:/var/run/docker.sock:ro
    environment:
      - NGINX_PROXY_CONTAINER=nginx-proxy
    restart: always

networks:
  default:
    external:
      name: ${NETWORK}

Notes:

- Both containers use the network created in the previous step.

- If you need extra server configuration, add any .conf file, like extra.conf:

  - under conf.d for settings on a proxy-wide basis

  - under vhost.d for settings on a per-virtual-host basis

Add an .env file with properties that will be used by the container with the proxy

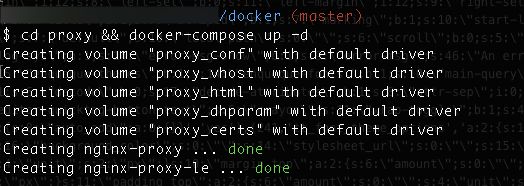

NETWORK=dockernet

Proxy and companion need to be started before other containers. Start the containers with docker-compose up -d

Extra configuration for the nginx proxy

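In this case, we need the server to accept large files on a proxy-wide basis. So, we add an extra configuration file in the folder ./data/conf. The file is written through sudo tee, because with sudo echo the redirection would still run as the normal user, and the data folders created by docker are typically owned by root.

echo 'client_max_body_size 100m;' | sudo tee ./data/conf/extra.conf

Now, restart the server, and we will be able to route bigger files.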
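When restarting, it may be worth first checking that nginx accepts the new directive. The sketch below assumes the container name nginx-proxy from the compose file above. The function name is hypothetical, and nginx -t only tests the configuration without applying it.

```shell
# Validate the nginx configuration inside the proxy container,
# and restart the container only if the test passes.
check_and_restart_proxy() {
  docker exec nginx-proxy nginx -t && docker restart nginx-proxy
}

# Example: check_and_restart_proxy
```

If the test fails, the running proxy is left untouched, so a typo in extra.conf does not take the sites offline.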

WordPress + DB

In this section, we add two WordPress installations.

WordPress with the first domain

Add a new directory to contain resources for the first WordPress and create a docker-compose.yml .

mkdir site1 && cd $_

nano docker-compose.yml

The code below defines the WordPress backend and database. Copy it into the yml file.

version: '3'

services:

  mariadb:
    image: mariadb:latest
    volumes:
      - ./db_data:/var/lib/mysql
    restart: always
    environment:
      - MYSQL_ROOT_PASSWORD=${DB_ROOT_PASSWORD}
      - MYSQL_DATABASE=${DB_NAME}
      - MYSQL_USER=${DB_USER}
      - MYSQL_PASSWORD=${DB_PASSWORD}

  wordpress:
    depends_on:
      - mariadb
    image: wordpress:latest
    expose:
      - 80
    volumes:
      - ./wp_core:/var/www/html
      - ./uploads.ini:/usr/local/etc/php/conf.d/uploads.ini
    restart: always
    environment:
      - WORDPRESS_DB_HOST=mariadb:3306
      - WORDPRESS_DB_NAME=${DB_NAME}
      - WORDPRESS_DB_USER=${DB_USER}
      - WORDPRESS_DB_PASSWORD=${DB_PASSWORD}
      - WORDPRESS_TABLE_PREFIX=wp_
      - VIRTUAL_HOST=${SITE_DOMAIN}
      - LETSENCRYPT_HOST=${SITE_DOMAIN}
      - LETSENCRYPT_EMAIL=${SITE_EMAIL}

networks:
  default:
    external:
      name: ${NETWORK}

Note: so far I have not been able to make nginx-proxy work with fastcgi. So, if you use an fpm variant of the wordpress image, you’ll get a 502 Bad Gateway error.

Then, add an .env file in the same folder. The env file contains all the information needed by the container.

NETWORK=dockernet
DB_ROOT_PASSWORD=db_pass
DB_NAME=db1
DB_USER=db1user
DB_PASSWORD=db1pass
SITE_DOMAIN=yourdomain.tld
SITE_EMAIL=yourname@yourprovider.com

Before starting the containers, we add a file named uploads.ini to configure PHP and enable large file uploads, as you see below.

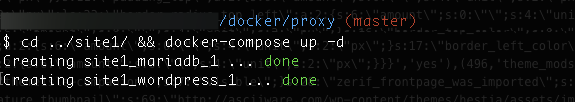

printf "file_uploads = On\nmemory_limit = 64M\nupload_max_filesize = 64M\npost_max_size = 64M\nmax_execution_time = 600\n" > ./uploads.ini

Run the containers with docker-compose up -d, and then open a browser and check your site is working.
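The same check can be done from a terminal. The following is a sketch that assumes curl is installed; the function names are hypothetical and yourdomain.tld is the placeholder from the .env file. A fresh WordPress usually answers with a redirect to its installer, so 3xx codes are counted as success here.

```shell
# Print the HTTP status code returned for https://<domain>/ (assumes curl is installed).
site_status() {
  curl -s -o /dev/null -w '%{http_code}' "https://$1/"
}

# Succeed when the site answers with a 2xx or 3xx status.
site_is_up() {
  case "$(site_status "$1")" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (placeholder domain):
# site_is_up yourdomain.tld && echo "site reachable over HTTPS"
```

The companion may need a short while to obtain the certificate, so the check can fail during the first minutes after startup.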

WordPress with the second domain

Add a new directory to contain resources for the second WordPress site and create a docker-compose.yml .

mkdir site2 && cd $_

nano docker-compose.yml

Repeat the procedure we did for the first domain but use new service names, and change domain and DB credentials.

Then, run the containers with docker-compose up -d, and open a browser to check the second site is working.
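Since each site needs its own .env file with different credentials, the file can also be generated. This is only a sketch: write_site_env and random_secret are hypothetical helpers, the example values are placeholders, and the random passwords replace the simple ones used for illustration in the first site.

```shell
# Print a random 32-character hex string read from /dev/urandom.
random_secret() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Write a .env file with the variables expected by the compose file.
# Usage: write_site_env <target-dir> <db-name> <db-user> <domain> <email>
write_site_env() {
  cat > "$1/.env" <<EOF
NETWORK=dockernet
DB_ROOT_PASSWORD=$(random_secret)
DB_NAME=$2
DB_USER=$3
DB_PASSWORD=$(random_secret)
SITE_DOMAIN=$4
SITE_EMAIL=$5
EOF
}

# Example (placeholder values):
# write_site_env . db2 db2user seconddomain.tld yourname@yourprovider.com
```

The file should be written before the first docker-compose up -d, because the database image reads the credentials only when it initializes an empty data folder.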

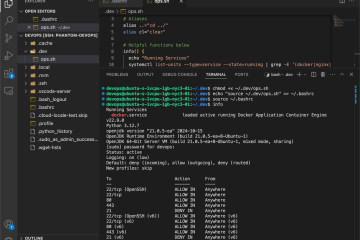

Check the containers

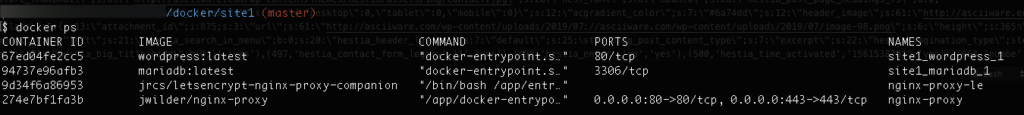

If you followed the procedure correctly, you should see all your containers running in docker. Use the docker ps command to verify.

docker ps

The result should look like the accompanying image.
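For a scripted check, the container names can be compared against the output of docker ps. In this sketch containers_running is a hypothetical helper; nginx-proxy and nginx-proxy-le are the container names set in the proxy compose file, while the names of the WordPress and database containers depend on the folder names and have to be looked up with docker ps.

```shell
# Succeed only if every named container appears in the list of running containers.
# Usage: containers_running <name>...
containers_running() {
  running=$(docker ps --format '{{.Names}}')
  for name in "$@"; do
    printf '%s\n' "$running" | grep -Fxq "$name" || {
      echo "not running: $name" >&2
      return 1
    }
  done
}

# Example: the two proxy containers defined earlier.
# containers_running nginx-proxy nginx-proxy-le && echo "proxy stack is up"
```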

Backup/Restore

This section shows a quick example of how to backup/restore. Here, the assumption is that you know the names of your db and WordPress containers. If not, use docker ps to get them.

To see more information about backup and restore, please see the article: WordPress Backup + Restore.

Backup

Here is how to backup the db on a running container.

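# perform database backup
docker exec $DB_IMAGE_NAME /usr/bin/mysqldump \
  -u $DB_USER \
  -p$DB_PASSWORD \
  $DB_NAME \
  > ${DB_BACKUP_FILE}

DB_IMAGE_NAME: name of the running database container (despite the variable name, docker exec expects a container, not an image). On recent mariadb images the dump tool may be installed as mariadb-dump rather than mysqldump.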

And here is how we backup the WP data.

# wordpress data backup
docker run --rm --volumes-from $WP_IMAGE_NAME \
  -v $BAK_TARGET_DIR:/backup ubuntu \
  tar czpf /backup/$WP_BACKUP_NAME.tar.gz $WP_DATA_DIR

WP_IMAGE_NAME: name of the running WordPress container

WP_DATA_DIR: directory you want to back up inside the container, e.g. /var/www/site/public_html
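The backup commands above write to names held in variables such as DB_BACKUP_FILE and WP_BACKUP_NAME. One way to set them so that successive backups do not overwrite each other is a timestamped name. The helper below is hypothetical, and db1 and wp1 are placeholder prefixes.

```shell
# Print <prefix>_<YYYYmmdd>_<HHMMSS> using the current local time.
# Usage: timestamped_name <prefix>
timestamped_name() {
  printf '%s_%s\n' "$1" "$(date +%Y%m%d_%H%M%S)"
}

# Example (placeholder prefixes):
# DB_BACKUP_FILE="$(timestamped_name db1).sql"
# WP_BACKUP_NAME="$(timestamped_name wp1)"
```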

Restore

Here is the restore of the DB.

# restore database
cat "$DB_SOURCE_FILE" | docker exec -i $DB_IMAGE_NAME \
  /usr/bin/mysql -u$DB_USER -p$DB_PASSWORD $DB_NAME

And this is how I restore the WordPress data.

# restore wordpress files
# paths in the archive are relative to /, so the files return to $WP_DATA_DIR
docker run --rm \
  --volumes-from $WP_IMAGE_NAME \
  -v $BAK_SOURCE_DIR:/backup \
  ubuntu \
  tar xzpf /backup/$WP_BACKUP_NAME.tar.gz -C /

Copy files from remote to local

Here is how to copy a folder from remote to local using rsync.

# copy remote ~/folder to local ./folder
rsync -rh user@123.45.67.890:~/folder/ ./folder/

Note: alternatively, you can use sftp or scp.

Copy backup files to remote

Here is how to copy a folder from local to remote with rsync.

# copy local ./folder to remote ~/folder
rsync -rh ./folder/ user@123.45.67.890:~/folder/

Stop and Clean

Stop all running containers.

# list the IDs of all containers, including stopped ones
docker ps -aq

# stop all
docker stop $(docker ps -aq)

Also remove the network.

docker network rm dockernet
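Stopping every container also affects containers on the host that do not belong to this setup. A narrower alternative is to run docker-compose down in each project folder, which stops and removes only the containers of that compose file and leaves the external network in place. In this sketch stop_stacks is a hypothetical helper and the folder paths in the example are assumptions about where the compose files were created.

```shell
# Stop and remove the containers of each compose project, in the order given.
# Usage: stop_stacks <project-dir>...
stop_stacks() {
  for dir in "$@"; do
    (cd "$dir" && docker-compose down) || return 1
  done
}

# Example (folder paths are assumptions): applications first, proxy last.
# stop_stacks ~/site1 ~/site2 ~/proxy
```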

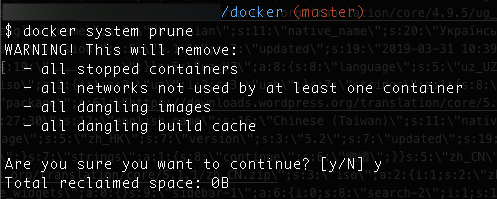

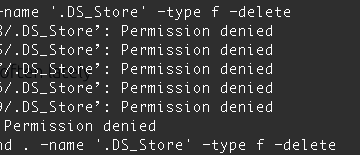

Another way to clear all unused docker resources, including docker images, unused networks, etc., is docker system prune.

docker system prune

The console output should look like the accompanying image.

Note: if you also want to remove the volumes, use the --volumes option, and if you want to clean all, use the -a option.

Sample Code

Sample code available on GitHub. In the repository, you’ll find both the docker-compose based approach used in this article, and the docker run based approach

References

- jwilder/nginx-proxy, on GitHub

- Multiple WordPress sites on docker, Autoize

- composerize service

- nginx-proxy redirect and enforce ssl, open issue on GitHub
